Welcome

Hey there, I'm Chris.

I’m a computing jack of all trades, master of none, but often better than a master of one. My knowledge and skills go properly full stack from hardware to frontend. PostgreSQL, Devops, Java are my strongest areas, but I’m just as comfortable fiddling with Ceph clusters, networking and web frontend.

I often find the problem I’m solving more interesting than the specific technology I’m using, as long as it’s Open Source.

Here you'll find my ramblings on various subjects as well as a number of side project I've got upto.

Recent Projects

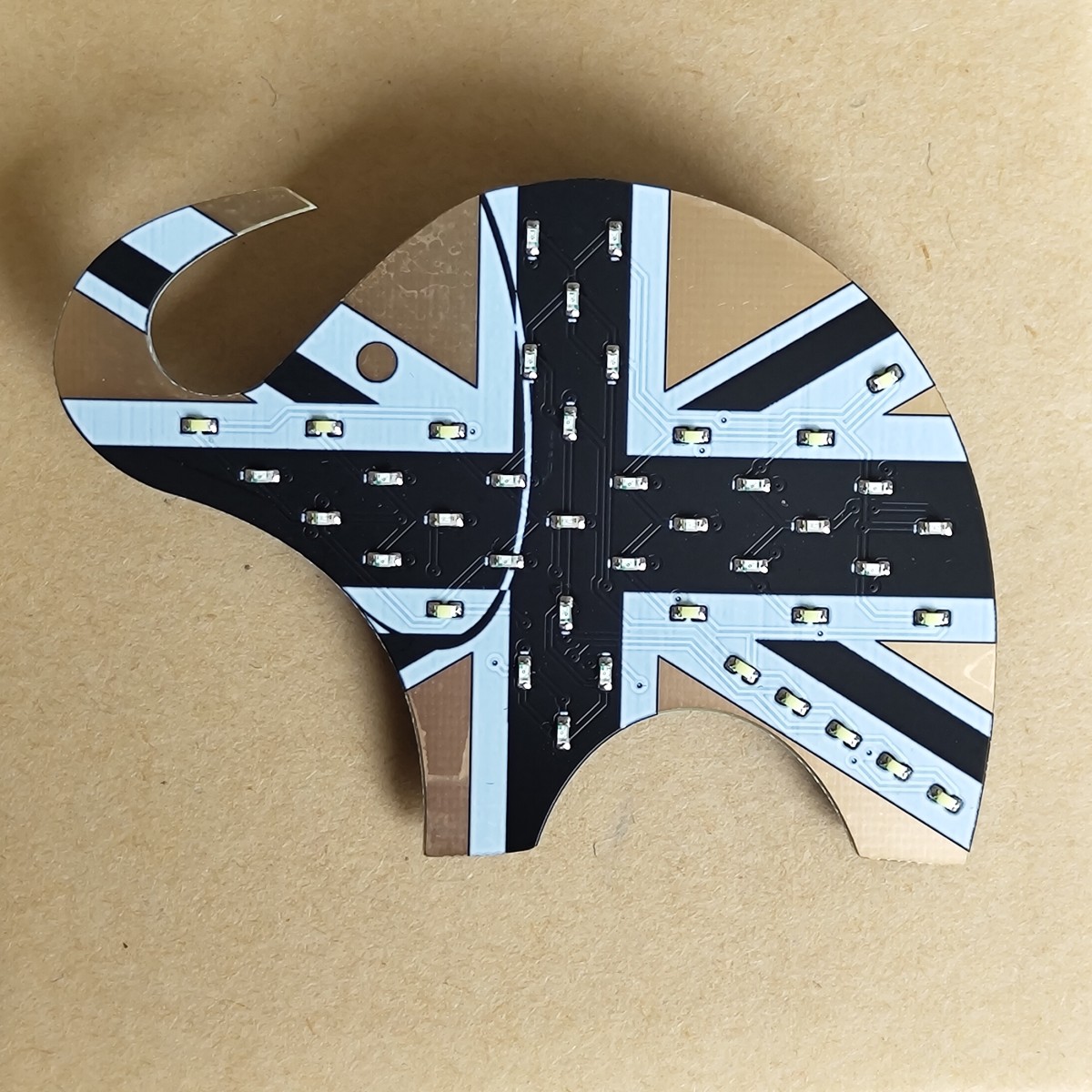

PGDay UK Elephant LED Badges

An elephant LED badge I created for PGDay UK, based from the logo I designed for the event and building on from a few other badges I've desgined and built.

Read more...

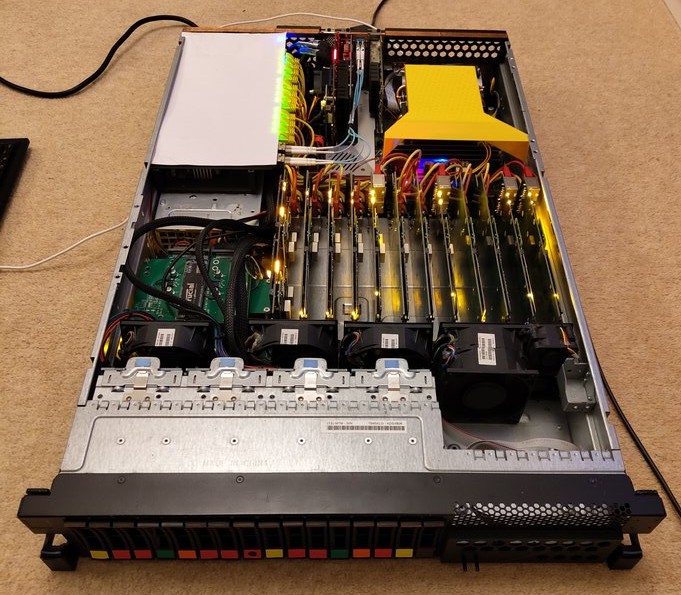

About Lowclus

Lowclus is a Raspberry Pi CM4 based hybrid cluster server that I've designed and prototyped. Central to the concept is that a cluster of smaller more power efficient servers can function and perform as well as a traditional solution and be more cost effective.

Read more...

Elephant LED Badge

A little elephant LED badge I created as a homage to PostgreSQL. Following on from the Cat badge that I previously designed, I wanted to create something PostgreSQL related, so based the design around an elephant.

Read more...Recent Posts

PGSQL Phriday 013 - Usecases and Why PostgreSQL

I'm the host of PGSQL Phriday blogging event for October 2023. I've always been much more into the practical side of engineering. Caring far more about what I can build with tools, rather than which tool I'm using. The challenge for Friday, October 6th 2023, publish a post on your blog telling a story about what you (or your team, client) built with PostgreSQL and how PostgreSQL helped you deliver. I'd love to read about the weird and varied things that people are using PostgreSQL for. If you think your usecase is boring, I'm sure it will be of use to someone. Plus you can always focus more on the story and how PostgreSQL helped to deliver a project, or could have, or didn't!

Read more...pgVis - Simple Visualisations For PostgreSQL

pgVis is a PostgreSQL extension for building simple visualisation dashboards with SQL. pgVis aims to make it easy to express data visualisations directly from SQL queries. Letting you quickly visualise some data for adhoc reports in psql or to build and share reporting dashboards in your organisation via pgvis-server. Either way, pgVis is designed to be PostgreSQL centric and to fit with your existing database workflows.

Read more...PostgreSQL - Not Just Relational

The extensibility of PostgreSQL is one of it's biggest advantages, making it capable of so many wide and varied usecases. Something that I've leveraged a lot on various projects, so much so that you probably don't need another database. Ryan mentions that it was extensions like PostGIS and hstore which brought him to PostgreSQL initially. For me it was TSearch2 (yeh, it was around 2008, it later got merged into core), then taking advantage of PostGIS, PL/Proxy, PGQ, JSONB and more over the years.

Read more...A PostgreSQL Backup Journey

I figured for PGSQL Phriday 002, that telling the story from when I looked after an energy insights database would be the most interesting way to talk about PostgreSQL backups. During the course of the project we used three differing backup tools and approaches, mainly driven by the ongoing exponential growth of the system. I also want to cover my biggest learning from that project. Which was something David Steele said at pgconf.eu: Make recovery part of your everyday processes.

Read more...Isokon Gallery

The Isokon Gallery is a small visitor centre for the Isokon building in north London. For anyone into modernist architecture it's well worth a visit, staffed by volunteers who are really pationate about the building, they made the experience very memorable.

Read more...Langham Dome

The Langham Dome is a unique little museum in the site of a former WW2 Anti-Aircraft gun training dome. The dome was state of the art in WW2 with an innovative projection mechanism, showing footage of planes and a mounted gun which projected a crosshair over the footage.

Read more...